As AI computing scales up and market demand grows, reliance on optical modules has surged. This article looks at how optical modules are used in large-scale AI systems, what market forecasts project for the sector, and which optical innovations are reshaping computational networking.

Section 1, AI Computing Scenario Optical Module Application

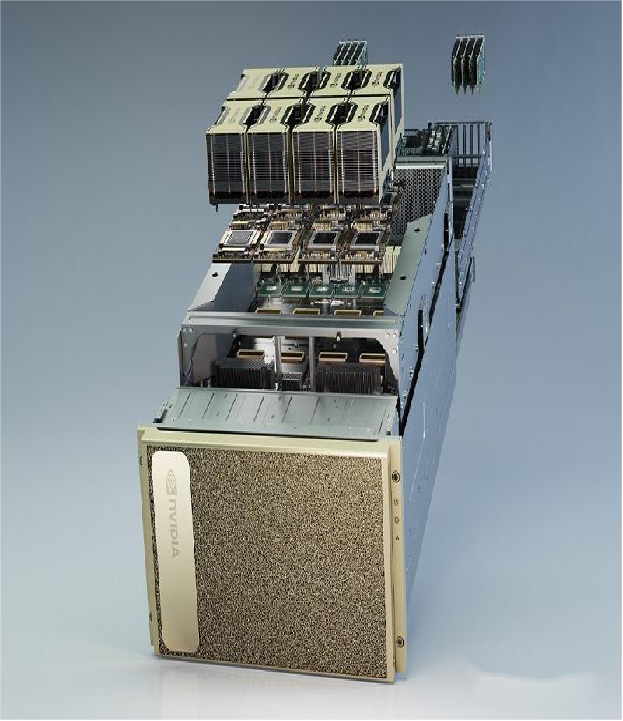

In NVIDIA A100 systems such as the NVIDIA DGX SuperPOD, all cables on both the compute and storage sides are NVIDIA MF1S00-HxxxE series active optical cables (AOCs). Each port requires an optical module, so each cable corresponds to two optical modules. The compute and storage sides together therefore require (1120+1124+1120)*2 + (280+92+288)*2 = 8048 optical modules. In other words, the ratio of GPUs to 200G optical modules is roughly 1:7.2.
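The count above can be reproduced with a few lines of Python. This is a minimal sketch: the cable counts are taken from the text, while the GPU count (140 DGX A100 nodes with 8 GPUs each) is an assumption not stated in this article, chosen because it reproduces the quoted 1:7.2 ratio.

```python
# Cable counts as listed in the text: compute side, then storage side.
compute_cables = [1120, 1124, 1120]
storage_cables = [280, 92, 288]
MODULES_PER_CABLE = 2  # one optical module at each end of an AOC

# Assumption (not from the article): 140 DGX A100 nodes x 8 GPUs each.
gpu_count = 140 * 8


def total_modules(cable_groups, per_cable=MODULES_PER_CABLE):
    """Sum all cables across the groups and convert to a module count."""
    return sum(sum(group) for group in cable_groups) * per_cable


total = total_modules([compute_cables, storage_cables])
modules_per_gpu = total / gpu_count

print(f"total 200G modules: {total}")
print(f"modules per GPU:    {modules_per_gpu:.1f}")
```

Changing `gpu_count` or the cable lists shows how sensitive the per-GPU ratio is to the cluster size and topology.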

The DGX GH200 supercomputer is equipped with 256 NVIDIA GH200 Grace Hopper Superchips, each of which can be regarded as a server, interconnected through NVLink Switches. Structurally, the DGX GH200 adopts a two-tier fat-tree topology, with the first and second tiers using 96 and 36 switches, respectively. Each NVLink Switch has 32 ports operating at 800G. In addition, the DGX GH200 has 24 NVIDIA Quantum-2 QM9700 InfiniBand (IB) switches for the IB network.

The estimate here is based on port counts. Because the L1 tier switches sit close to the compute nodes, those links are assumed to use copper cables, with no optical modules involved. In the L2 tier, the 36 switches form a non-blocking fat-tree architecture in which every switch port connects down to an uplink port on an L1 tier switch, and each of those links needs an optical module at both ends. The L2 tier therefore requires 36 * 32 * 2 = 2304 units of 800G optical modules. In the IB network, the 24 switches require 24 * 32 = 768 units of 800G optical modules. In total, the DGX GH200 supercomputer requires 2304 + 768 = 3072 units of 800G optical modules, which corresponds to 12 units of 800G optical modules per GH200 chip.
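The port-based estimate can be checked with a short script. This is a sketch using only the switch, port, and chip counts given in the text; the factor of 2 reflects the assumption that each L2-to-L1 link needs a module at both ends. Note that 36 × 32 × 2 evaluates to 2304, which gives a total of 3072 and matches the figure of 12 modules per GH200 chip.

```python
L2_SWITCHES = 36       # second-tier NVLink Switches
IB_SWITCHES = 24       # Quantum-2 QM9700 switches
PORTS_PER_SWITCH = 32  # port count used in the text for both switch types
GH200_CHIPS = 256


def gh200_module_estimate():
    """Return (nvlink_modules, ib_modules, total) for the DGX GH200."""
    # Every L2 port links to an L1 uplink port; one module at each end.
    nvlink_modules = L2_SWITCHES * PORTS_PER_SWITCH * 2
    # IB side: one module per switch port, as counted in the text.
    ib_modules = IB_SWITCHES * PORTS_PER_SWITCH
    return nvlink_modules, ib_modules, nvlink_modules + ib_modules


nvlink_modules, ib_modules, total_800g = gh200_module_estimate()
per_chip = total_800g / GH200_CHIPS

print(f"NVLink L2 modules: {nvlink_modules}")
print(f"IB modules:        {ib_modules}")
print(f"total 800G:        {total_800g}")
print(f"per GH200 chip:    {per_chip:.0f}")
```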

Section 2, Optical Module Market Demand Forecast Driven by Computational Networking

Demand for computing power has surged with new-generation information technologies such as cloud computing, artificial intelligence, and big data, and this has accelerated the build-out of cloud computing infrastructure. At the same time, with the full launch of projects such as “East Data, West Computing,” China’s computing infrastructure is expanding rapidly. Optical modules are key components of cloud data centers, so market demand for them will keep growing as data transmission volumes rise.

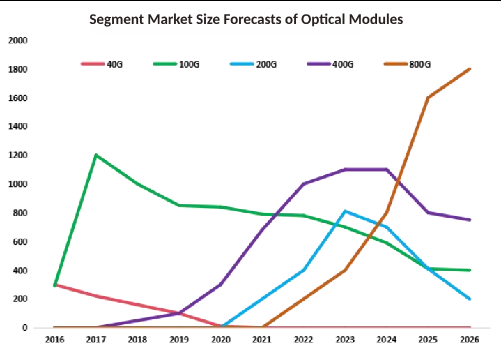

According to LightCounting’s projections, the global adoption rate of 800G technology is expected to be only 0.62% in 2023. Large AI models such as ChatGPT place new demands on data traffic both inside and between data centers, which could accelerate the shift of optical modules towards 800G. 800G optical modules are expected to start dominating the market by the end of 2025.

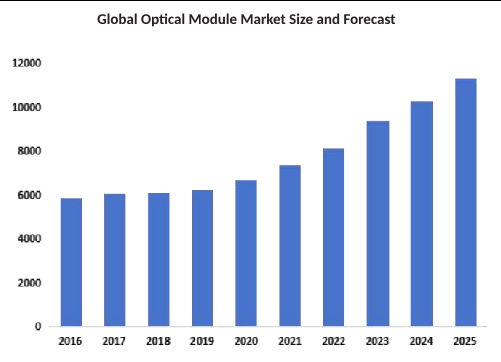

Based on LightCounting’s data, the global market for optical modules grew from $5.86 billion in 2016 to $6.67 billion in 2020. Projections suggest that by 2025 the global optical module market will reach $11.3 billion, about 1.7 times its 2020 size. By segment, the data communication market is expected to account for around 60% and the telecommunications market for approximately 40%.
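The growth figures can be sanity-checked with a small calculation. This is a rough sketch with two assumptions: the 2020 market size is taken as $6.67 billion, the value implied by the 1.7x ratio to the $11.3 billion projection, and the 60/40 segment split is applied to the 2025 total. The compound annual growth rate is derived here for illustration and is not a LightCounting figure.

```python
# USD billions. Assumption: 2020 value implied by the 1.7x ratio in the text.
market_2020 = 6.67
market_2025 = 11.3
years = 2025 - 2020

growth_ratio = market_2025 / market_2020
# Implied compound annual growth rate over the five-year span.
cagr = growth_ratio ** (1 / years) - 1

# Assumption: the approximate 60/40 split applies to the 2025 projection.
datacom_2025 = 0.60 * market_2025
telecom_2025 = 0.40 * market_2025

print(f"2025 / 2020 ratio: {growth_ratio:.2f}x")
print(f"implied CAGR:      {cagr:.1%}")
print(f"datacom 2025:      ${datacom_2025:.2f}B")
print(f"telecom 2025:      ${telecom_2025:.2f}B")
```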

Section 3, GIGALIGHT Optical Modules for Computational Applications

The core technologies behind GIGALIGHT’s silicon photonics optical modules are an innovative free-space chip-on-board (COB) packaging design with high coupling efficiency and a software locking algorithm for the Mach-Zehnder interferometer (MZI). On the silicon waveguide chip side, the company has also worked with partners on the collaborative design of multiple silicon chips.