Why Are InfiniBand Networks Crucial in High-Performance Computing Data Centers?

As high-throughput applications such as data analysis and machine learning continue to expand rapidly, demand for high-bandwidth, low-latency interconnects is spreading to a broader range of markets. In response, a growing number of high-performance computing data centers are turning to InfiniBand, a specialized network technology designed for high-speed interconnects that has gained significant traction in high-performance computing facilities worldwide.

Today we will take an in-depth look at InfiniBand technology and its applications in high-performance computing data centers.

Exploring InfiniBand Networks for High-Performance Computing Data Centers

InfiniBand technology uses a high-performance input/output (I/O) architecture built on a switched fabric, so many devices can be connected through switches and cables at the same time. InfiniBand cables and transceivers come in different transmission rates and physical interfaces, and are commonly grouped by connector form factor (QSFP, QSFP-DD, and SFP) and by cable construction, such as active optical cables (AOC). Together, these options cover the diverse business requirements of high-performance computing data centers.

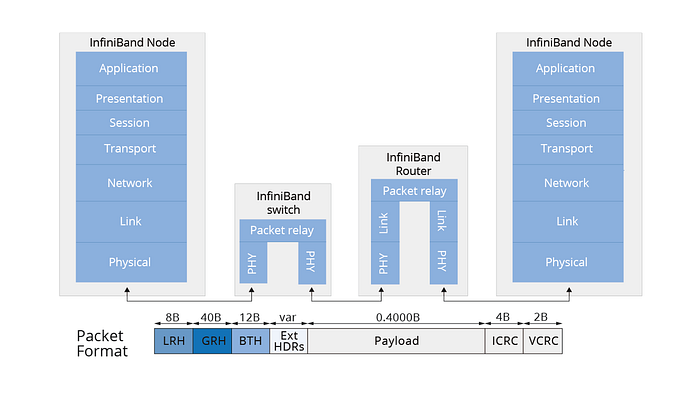

In large-scale computing environments, InfiniBand networks use dedicated channels to establish connections and transfer data between hosts and peripheral devices. InfiniBand supports a maximum packet payload (MTU) of 4 KB and uses point-to-point, bidirectional serial links. These links can be aggregated into 4X and 12X widths, giving a combined data rate of up to 300 Gbit/s (for example, twelve EDR lanes at 25 Gbit/s each).
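The lane aggregation arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not a vendor tool: the function names are hypothetical, and the per-lane figures are nominal data rates assumed here for three InfiniBand generations (EDR 25, HDR 50, and NDR 100 Gbit/s per lane).

```python
# Nominal per-lane data rates in Gbit/s (assumed values for illustration).
LANE_RATE_GBPS = {"EDR": 25, "HDR": 50, "NDR": 100}

# Link widths covered by this sketch.
SUPPORTED_WIDTHS = (1, 4, 12)


def link_throughput_gbps(generation: str, width: int) -> int:
    """Return the aggregate one-direction data rate of a link in Gbit/s."""
    if width not in SUPPORTED_WIDTHS:
        raise ValueError(f"unsupported link width: {width}X")
    return LANE_RATE_GBPS[generation] * width


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


if __name__ == "__main__":
    for width in SUPPORTED_WIDTHS:
        rate = link_throughput_gbps("EDR", width)
        print(f"EDR {width}X: {rate} Gbit/s "
              f"({gbps_to_gigabytes_per_s(rate):.1f} GB/s)")
```

Under these assumptions a 12X EDR link comes to 300 Gbit/s, which is 37.5 GB/s once bits are converted to bytes, so it is worth checking which unit a datasheet is quoting.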

How InfiniBand Networks Meet the Demands of High-Performance Computing Data Centers

Today, the internet serves as a vast infrastructure supporting a multitude of data-intensive applications, and large enterprises are building high-performance computing data centers to meet the demands of these applications. A high-performance computing system gets its performance from high-bandwidth connections between computational nodes, storage, and analytics systems. Low latency is an equally critical metric for these architectures. High-performance computing data centers therefore choose InfiniBand networks to meet both requirements: high bandwidth and low latency.

When compared to RoCE and TCP/IP, InfiniBand networks exhibit significant advantages in high-throughput processing environments, especially in scenarios utilizing server virtualization and cloud computing technologies.

The Advantages of InfiniBand Networks in High-Performance Computing Data Centers

Advances in data communication, internet technology, and visualization all depend on more powerful computing, greater capacity, secure storage, and efficient networks. InfiniBand networks deliver higher bandwidth while reducing latency and the load on computational resources, making them an ideal fit for high-performance computing data centers.

1. Network Efficiency: InfiniBand networks (IB networks) offload protocol processing and data movement from the CPU to the interconnect, significantly enhancing CPU efficiency. This allows rapid processing of high-resolution simulations, large datasets, and highly parallel algorithms. In high-performance computing data centers, this results in substantial performance improvements, benefiting applications like Web 2.0, cloud computing, big data, financial services, virtualized data centers, and storage applications. It reduces task completion times and overall operational costs.

2. InfiniBand Speed: In an era dominated by 100G Ethernet, IB network speeds continue to evolve. GIGALIGHT optical modules support advanced transmission protocols like Remote Direct Memory Access (RDMA), enhancing the efficiency of high-capacity workloads. With InfiniBand technology’s ultra-low latency, GIGALIGHT InfiniBand optical modules significantly boost the interconnectivity of high-performance computing networks and data centers.

3. Scalability: InfiniBand supports roughly 48,000 nodes in a single Layer 2 subnet. Moreover, IB networks do not rely on broadcast mechanisms like ARP, which avoids broadcast storms and the bandwidth they waste.
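The subnet size in the scalability item follows from InfiniBand's 16-bit local identifier (LID) addressing. The sketch below is a minimal illustration of that arithmetic, assuming the usual split of the LID space into a unicast range (0x0001 to 0xBFFF) and a multicast range (0xC000 to 0xFFFE); the constant and function names are hypothetical.

```python
# Assumed partition of the 16-bit LID address space.
UNICAST_FIRST = 0x0001
UNICAST_LAST = 0xBFFF
MULTICAST_FIRST = 0xC000
MULTICAST_LAST = 0xFFFE


def unicast_lid_count() -> int:
    """Number of LIDs available for unicast addressing in one subnet."""
    return UNICAST_LAST - UNICAST_FIRST + 1


def is_unicast(lid: int) -> bool:
    """Return True if the LID falls inside the unicast range."""
    return UNICAST_FIRST <= lid <= UNICAST_LAST


if __name__ == "__main__":
    print(f"Unicast LIDs per subnet: {unicast_lid_count()}")
    print(f"0xC000 is unicast: {is_unicast(0xC000)}")
```

The unicast range holds 49,151 addresses, which is where the commonly quoted "48K" figure comes from. Switches also consume LIDs, so the number of end nodes in a real subnet is somewhat lower.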

These advantages make InfiniBand optical modules a preferred choice for data centers, high-performance computing networks, enterprise core and distribution layers, and various other scenarios.

In Conclusion

In a nutshell, InfiniBand networks simplify high-performance network architecture and reduce the latency associated with multi-tiered structures. They provide robust support for bandwidth upgrades at critical computing nodes, helping users in high-performance computing data centers maximize their business performance. Looking ahead, InfiniBand adoption is likely to keep growing across a wide range of domains.